The Thinking Partner: Your Complete Guide to Reasoning Models

Jan 20, 2025

I recently read a fantastic blog post by Ben Hylak on Latent Space about how he learned to use reasoning models effectively after some initial frustration.

I had a very similar learning curve when I started using O1. I also treated it like a regular chat model (Claude/GPT) and got frustrated. Through trial and error, I discovered that its true strength lies in structured, deliberate interaction.

Rather than seeing LLMs as simple response generators, I've consistently approached them as tools for thought that enhance our thinking capabilities. With reasoning models, once I moved from casual conversation to strategic prompting, I started treating them more as thinking partners than mere assistants. This intentional approach transformed my results, improving my ability to solve problems and generate deeper insights.

How Are Reasoning Models Different?

What makes these new reasoning models (O1, DeepSeek) different is their ability to mimic one of humanity's most valuable cognitive traits: deliberate thought.

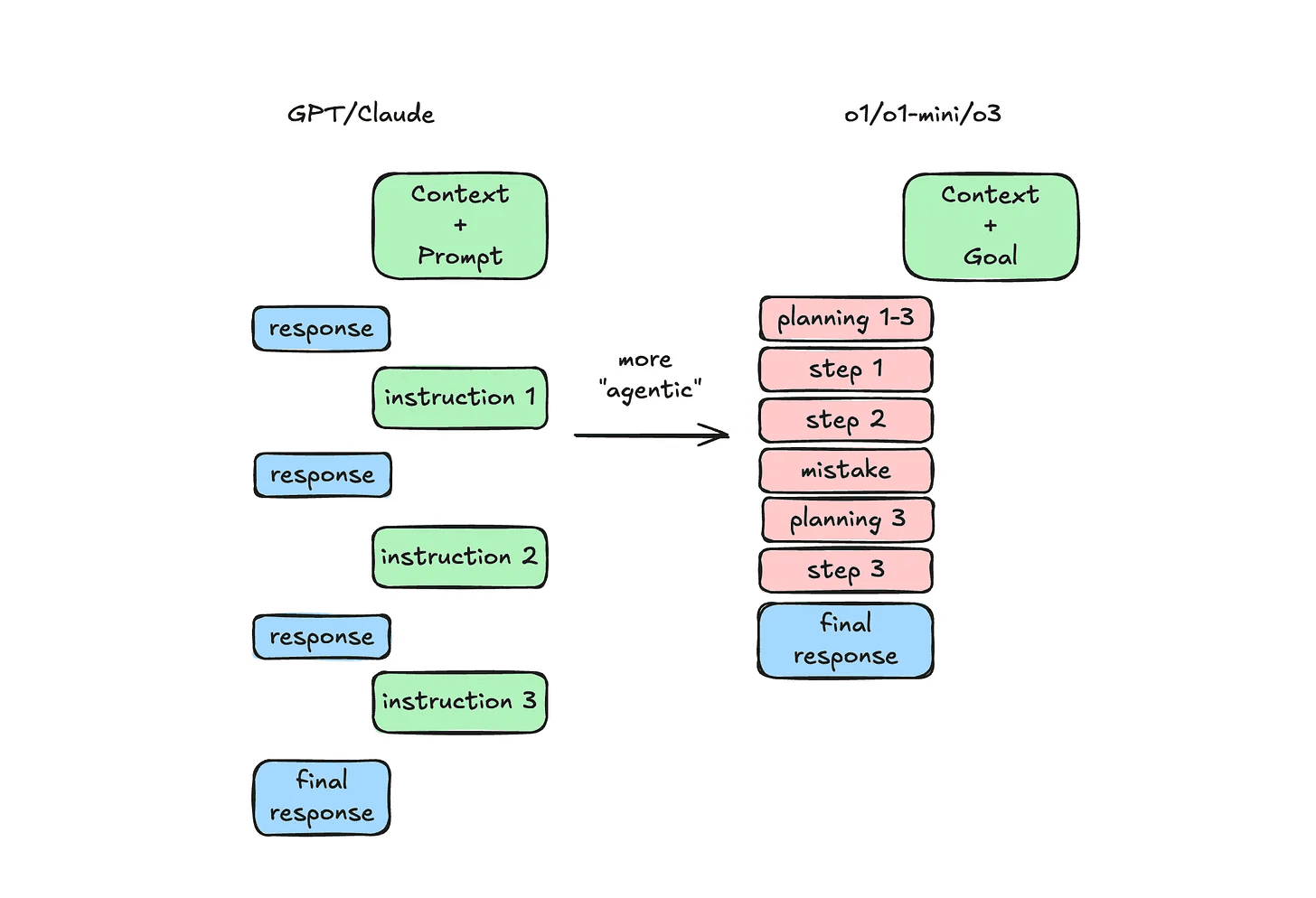

Unlike traditional chat models, these systems evaluate multiple approaches through a sustained chain of reasoning, keeping the intermediate steps so that the final answer stays logically coherent:

- They pause to analyse the complete scope of a problem

- They consider multiple solution strategies

- They evaluate and refine their approach

- They learn from unsuccessful attempts

Source: https://www.latent.space/p/o1-skill-issue

Why reasoning models require a different approach than regular chat models

The distinction between reasoning models and chat models isn't just about complexity – it's about how they approach problem-solving.

The key isn't choosing the "better" model; it's understanding your specific needs. Chat models handle fluid conversation, while reasoning models tackle systematic analysis, so the right choice depends on the demands of your use case.

Your interaction style should align with each model's core strengths. While chat models thrive on contextual dialogue, reasoning models perform best when given well-defined parameters and explicit problem statements.

This guide will help you decide when to use reasoning models and how to use them effectively.

A practical guide to using reasoning models

1. Move from simple prompts to detailed instructions

Unlike conversational models such as Claude or GPT-4, O1 and other reasoning models aren't designed for iterative exchanges. Think of O1 as a junior intern who needs comprehensive context upfront rather than as a chat partner.

With chat models, you often start with a quick question and minimal context, gradually refining your query based on the model's output and feedback. Chat models excel at extracting context through back-and-forth dialogue.

In contrast, reasoning models operate on a 'context-first' principle: they take your inputs at face value without seeking clarification. This means you need to provide complete information upfront to get meaningful results.

Even for seemingly simple queries, provide O1 with:

- A full problem background: share all prior attempts and their outcomes, including what worked and what didn’t.

- Comprehensive data: attach database schemas, system architecture details, or relevant technical setups.

- Organizational context: explain your company’s purpose, scale, and any industry-specific terminology or internal jargon.

Practical Example: Chat Model vs. Reasoning Model Approaches

❌ Chat Model Style (Insufficient for Reasoning Models):

✅ Reasoning Model Brief (Proper Context):

This full-context approach ensures that O1 has everything it needs to produce a well-reasoned, actionable output in a single pass, without relying on iterative clarification.
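As a hypothetical illustration of that contrast, the sketch below puts a chat-style one-liner next to a single-pass brief assembled from the three kinds of context listed earlier. The checkout scenario, the table names, and the `build_brief` helper are all invented for this sketch; it only builds the prompt text and does not call any model API.

```python
from textwrap import dedent

# Chat-style opener: workable with a chat model that can ask follow-ups,
# but too thin for a single-pass reasoning model.
chat_style_prompt = "Why is my checkout API slow?"


def build_brief(question: str, background: str, data: str, org_context: str) -> str:
    """Assemble one prompt from the question plus the three kinds of context.

    Hypothetical helper: the section titles are an assumption, not a required format.
    """
    sections = [
        ("Question", question),
        ("Problem background (prior attempts and outcomes)", background),
        ("Technical data (schemas, architecture, setup)", data),
        ("Organizational context (purpose, scale, jargon)", org_context),
    ]
    # Refuse to build a brief with an empty section, since the model will not ask for it.
    missing = [title for title, body in sections if not body.strip()]
    if missing:
        raise ValueError(f"Brief is missing: {', '.join(missing)}")
    return "\n\n".join(f"## {title}\n{dedent(body).strip()}" for title, body in sections)


# Invented example values, for illustration only.
brief = build_brief(
    question="Why is p95 latency on POST /checkout above 2 s, and what should we change first?",
    background="""
        Added an index on orders.user_id: no measurable change.
        Doubled the app server count: latency unchanged, so CPU is probably not the bottleneck.
    """,
    data="""
        Tables: orders(id, user_id, total, created_at), order_items(order_id, sku, qty).
        Stack: one Postgres primary, a Python API tier, no cache layer.
    """,
    org_context="""
        Mid-size online retailer, roughly 50k orders per day.
        "Basket" is our internal term for an unpaid order.
    """,
)
print(brief)
```

The brief is many times longer than the one-liner, and that is the point: everything a chat model would have extracted over several turns is stated before the model starts reasoning.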

Even when asking a straightforward technical question:

- Describe all prior methods you explored that failed to resolve the issue.

- Include relevant technical context, e.g. system setup, data structures, or configurations.

- Provide a detailed description of your organization’s goals, scale, and any specific terminology unique to your company.

Approach O1 as you would a capable but new colleague who needs onboarding. While skilled at analysis, O1 can misjudge task complexity, overcomplicating simple requests or exploring tangents that don't align with your goals. Detailed context is what keeps it on track.

2. Let the model think: stop micromanaging

Unlike chat models where we specify the approach ('act as a senior developer'), O1 works best when given clear objectives instead of methodology. Focus on defining what you want to achieve - O1 will determine the most efficient path to get there, often with less need for step-by-step guidance.

Shift from how to what: apply the CLEAR framework

C - Context (comprehensive background)

L - Limitations (constraints and boundaries)

E - Expected Output (specific deliverables)

A - Assumptions (stated explicitly)

R - Requirements (success criteria)

CLEAR Framework Core Principles

1. Context (Comprehensive Background)

Provide a detailed and accurate description of the problem, including relevant data and past efforts. Context enables the model to fully understand the task.

2. Limitations (Constraints and Boundaries)

Define clear boundaries for the task, such as time limits, resource constraints, or specific technologies to be used. This ensures practical and relevant outputs.

3. Expected Output (Specific Deliverables)

State precisely what you expect from the model, such as reports, analyses, or code. This helps in aligning the model’s output with your needs.

4. Assumptions (Stated Explicitly)

Outline any assumptions about the data, the task, or the desired results. Explicit assumptions align the model’s reasoning with your expectations.

5. Requirements (Success Criteria)

List clear metrics or criteria that the output must meet to be considered successful. This aids in evaluating the model’s response effectively.
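One way to make the five CLEAR elements hard to skip is to give each its own required field. The sketch below is a minimal illustration under that assumption: the `ClearBrief` class, its `render` method, and the churn scenario are hypothetical, and the output is plain prompt text rather than a call to any model.

```python
from dataclasses import dataclass, fields


@dataclass
class ClearBrief:
    """One field per CLEAR element, in C-L-E-A-R order (hypothetical helper)."""
    context: str
    limitations: str
    expected_output: str
    assumptions: str
    requirements: str

    def render(self) -> str:
        """Return the brief as labelled sections, rejecting any empty element."""
        parts = []
        for f in fields(self):
            value = getattr(self, f.name).strip()
            if not value:
                raise ValueError(f"CLEAR element '{f.name}' is empty")
            heading = f.name.replace("_", " ").title()
            parts.append(f"{heading}:\n{value}")
        return "\n\n".join(parts)


# Invented example values; note that they state goals, not a method to follow.
prompt = ClearBrief(
    context="Weekly churn rose from 2% to 3.5% after the March pricing change.",
    limitations="Use only the attached CSV export; no external data; under 500 words.",
    expected_output="A ranked list of the three most likely churn drivers, each with evidence.",
    assumptions="Cancellation dates are accurate; free-trial users are excluded.",
    requirements="Every claim cites a column in the data; uncertain claims are flagged.",
).render()
print(prompt)
```

Nothing in the brief tells the model how to do the analysis. It describes the situation, the boundaries, and what a successful answer looks like, which is the shift from "how" to "what" described above.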

Examples

Analysis Pattern

Code Generation Pattern

Do's and Don'ts with Reasoning Models

Don't Treat Them Like Chat Models

Reasoning models excel with thorough, organised input that enables deeper analysis.

Avoid:

- Short, iterative questions

- Incomplete context

- Informal requests

Instead:

✅ Comprehensive, structured prompts

Don't Skip the Context

Complete context leads to more accurate, relevant outputs.

Avoid:

- Assuming model knowledge

- Minimal background info

- Implicit requirements

Instead:

✅ Detailed context setting

Don't Rush the Process

Good results take time to compute, so give the model room to finish its reasoning.

Avoid:

- Expecting instant responses

- Interrupting processing

- Skipping validation

Instead:

✅ Allowing thorough processing

Reasoning models signal a shift from conversational AI to analytical, problem-solving AI. The future of AI interaction may prioritize depth over speed, enabling structured and thoughtful engagement with complex tasks.

This evolution introduces opportunities for high-latency applications like deep research, intricate data analysis, and multi-step reasoning—tasks where waiting minutes or even days for a response is justified. However, it also demands new design paradigms to ensure user experiences remain seamless and delays feel worthwhile.
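A rough sketch of one such design choice: rather than cancelling a slow request early, the caller waits with a generous timeout and surfaces progress while the model works. Everything here is an assumption for illustration. `slow_reasoning_call` is a stand-in that only sleeps, and `run_with_progress` is a hypothetical helper, not part of any model SDK.

```python
import asyncio


async def slow_reasoning_call(prompt: str) -> str:
    """Stand-in for a real model call; sleeps briefly to simulate latency."""
    await asyncio.sleep(0.3)
    return f"analysis of: {prompt}"


async def run_with_progress(prompt: str, timeout_s: float, tick_s: float = 0.1) -> tuple[str, int]:
    """Wait for the full answer, printing progress ticks instead of interrupting."""
    task = asyncio.create_task(slow_reasoning_call(prompt))
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout_s
    ticks = 0
    while not task.done():
        if loop.time() >= deadline:
            task.cancel()
            raise TimeoutError(f"no answer within {timeout_s} s")
        # asyncio.wait returns after tick_s without cancelling the pending task.
        await asyncio.wait({task}, timeout=tick_s)
        if not task.done():
            ticks += 1
            print(f"still thinking... ({ticks})")
    return task.result(), ticks


answer, ticks = asyncio.run(run_with_progress("quarterly churn brief", timeout_s=5.0))
print(answer)
```

In a real application the timeout would be minutes rather than seconds, and the progress tick would drive a status indicator or a notification rather than a print statement.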

These methodical, high-latency models, paired with thoughtful design, mark a turning point in AI applications. What might seem like a limitation, taking more time to process, becomes a strength that opens new frontiers for meaningful innovation.

Further Reading:

https://www.prompthub.us/blog/prompt-engineering-with-reasoning-models

https://platform.openai.com/docs/guides/reasoning#advice-on-prompting

https://www.deeplearning.ai/short-courses/reasoning-with-o1/
